Kerrisdale Capital is short shares of Pure Storage Inc (NYSE:PSTG), a $27 billion market cap enterprise storage company.

After riding the all-flash array adoption wave in the enterprise market, Pure finds itself with a modest growth rate, declining competitive differentiation, and a product portfolio poorly positioned to capitalize on cloud or AI infrastructure spend. Since its core product has little chance of hyperscaler adoption, Pure has crafted a story suggesting its new quad-level cell (QLC) flash-based products will conquer the hard disk drive (HDD). However, little of the Pure pitch is grounded in reality, and in fact, much of what Pure spouts completely ignores the fundamentals of hyperscaler storage architectures and economics.

Seeking to demonstrate hyperscaler traction in any form whatsoever, the company recently announced the license of its software to Meta, which was met with cheers from bulls. But the capabilities provided by Pure are narrow and replicable. Meta will not be purchasing Pure products, instead buying flash memory directly and using its ODMs to assemble white box hardware, and the engagement has not been expanded to Meta’s other data centers. The prospects for wins at other major hyperscalers are dim given differing technology stacks and development approaches. Most importantly, for AI-centric applications, the surging wave of ultra-high performance data infrastructure players such as DDN, WEKA, VAST Data, and Hammerspace will win that market over Pure, leveraging commodity hardware no less. Indeed, Pure is deriving little benefit from AI spend.

Pure claims its TCO analyses show that its Pure//E product is a no-brainer, yet why haven’t the world’s most sophisticated IT buyers purchased it hand over fist? We construct our own TCO analysis in this report and calculate that HDDs retain a 5-6x TCO advantage versus flash-based solutions, a conclusion supported by hyperscaler storage architects with whom we spoke.

In its core enterprise storage market, Pure’s products are undifferentiated and its market share has been stagnant for years. And if you believe enterprise workloads will continue migrating to the cloud, then Pure’s core business is a melting ice cube. Pure has been a great beneficiary of all-flash array (AFA) adoption in the enterprise, but that market has matured and AFA revenues are now projected to grow at single-digit rates. Given the relatively small size and modest growth prospects of the AFA market and the predominance of large IT hardware OEM competitors (such as Dell, Hewlett Packard Enterprise, NetApp, and others), Pure’s best growth years are behind it. A former Pure employee described competition in Pure’s core market as “knife fights in phone booths… you're not really taking share any longer; you're just trading share amongst those three primary players.” An enterprise IT reseller likened choosing among the top vendors to choosing between “Toyota, Honda, and Nissan… they're really close.”

As for Pure’s “software” business, it’s largely maintenance, support, and pre-purchased upgrade revenue that doesn’t deserve the SaaS valuation multiple bulls try to ascribe to it. Nothing about the Pure Storage story deserves a premium valuation multiple anymore. A former tech darling that’s become tired and legacy, the purest thing about PSTG shares is pure downside.

I. Investment Highlights

None of the flash-based solution providers can present an honest total cost of ownership (TCO) analysis. HDDs dominate at hyperscale because the math just works. There is a massive disconnect between the Pure marketing message that flash will displace HDDs in the data center and reality. About 1,050EB of HDDs were sold into data centers last year compared to the ~15EB that Pure shipped in total from FY2016 to FY2025. Most of the 235EB of flash currently shipping into data centers is for server cache as opposed to bulk storage. The fact that flash has effectively won in the enterprise storage market says little about its prospects for penetrating hyperscaler implementations that have completely different technology and economic parameters. Because flash-based solutions offer high performance, investors sometimes assume they must surely align with applications such as AI. Yet, AI represents a limited share of hyperscaler workloads. In contrast to the flash camp’s pitch, we suspect many investors would be surprised just how widely used HDDs are, even for high performance applications such as AI. More broadly, hyperscalers deliver a breadth of services and applications at such a massive scale that their infrastructure decisions and economics differ greatly from those of even the largest enterprises.
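
The scale gap follows directly from the exabyte figures quoted above. A minimal sketch using only those numbers; the variable names are ours, and the ten-year count simply spans FY2016 through FY2025 inclusive:

```python
# Figures quoted in the text above, in exabytes (EB).
hdd_eb_last_year = 1050     # HDDs sold into data centers last year
pure_eb_cumulative = 15     # Pure's approximate total shipments, FY2016-FY2025
flash_eb_datacenter = 235   # flash currently shipping into data centers

fiscal_years = 2025 - 2016 + 1  # FY2016 through FY2025, inclusive

# How many times Pure's cumulative shipments fit into one year of HDD volume.
hdd_to_pure = hdd_eb_last_year / pure_eb_cumulative
# Pure's cumulative shipments as a percentage of one year of HDD volume.
pure_share_pct = 100 * pure_eb_cumulative / hdd_eb_last_year
# Pure's average annual shipments over the period.
pure_avg_eb_per_year = pure_eb_cumulative / fiscal_years
# Data center HDD volume relative to data center flash volume.
hdd_to_flash = hdd_eb_last_year / flash_eb_datacenter

print(f"One year of data center HDD volume: {hdd_to_pure:.0f}x Pure's cumulative total")
print(f"Pure's cumulative total as a share of that year: {pure_share_pct:.1f}%")
print(f"Pure's average shipments: {pure_avg_eb_per_year:.1f} EB per fiscal year")
print(f"Data center HDD vs. data center flash: {hdd_to_flash:.1f}x")
```

The flash comparison overstates flash's role in bulk storage, since most of that 235EB is server cache.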

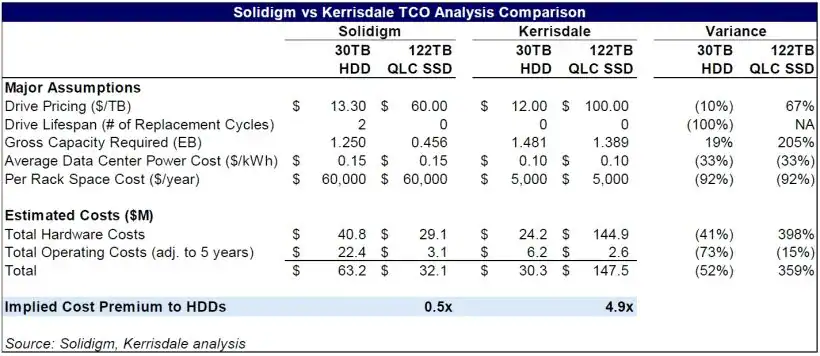

We believe Pure and solid-state drive (SSD) suppliers have tried to hoodwink the market by presenting fairly dishonest total cost of ownership (TCO) analyses. Pure has gone so far as to suggest that its products are lower cost, even if the HDDs were given away for free. If their TCO conclusions were correct, hyperscalers such as Google and Amazon, possibly the most technologically and financially sophisticated buyers of technology hardware on the planet, would surely be buying more Pure products, but they aren’t. Pure and others play games with their TCO analyses, such as assuming enterprise-like, high performance applications, high levels of data reduction (which drastically reduces the amount of required capacity), imaginary GPU utilization penalties to burden HDDs, or other factors not representative of hyperscale implementations. Pure hasn’t made a credible attempt to transparently explain its TCO analysis, so we critique the most detailed analysis we’ve seen, by SSD supplier Solidigm, and construct our own TCO analysis.

Similar to Pure, Solidigm’s TCO analysis alleges a massive cost advantage relative to HDDs. Unlike Pure, however, Solidigm actually discloses its assumptions to allow a detailed review of its methodology. The Solidigm TCO analysis contains a number of unrealistic assumptions, including (1) incorporating the benefits of using VAST Data software without including the associated costs, (2) assuming drive lifespans that necessitate two full HDD refreshes, (3) reducing the gross SSD capacity required based on data reduction assumptions inconsistent with hyperscaler practice, and (4) assigning unrealistic space costs to HDDs that represent 36% of their TCO. Adjusting for these distortions and recognizing that space and power differences have little impact on the overall TCO math, flash-based solutions suffer a 5-6x TCO disadvantage relative to HDDs. This assessment is consistent with the opinions of numerous hyperscaler storage specialists with whom we spoke. If anything, we believe Pure investors should review the Solidigm analysis to see the competitive threat posed by VAST Data’s software, as it largely nullifies Pure’s assumed advantages over commodity SSDs.
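
To see how the four assumptions listed above can swing a TCO comparison, consider a deliberately simplified sketch. Every dollar figure and ratio below is a hypothetical placeholder chosen for illustration, not an input from the Solidigm analysis or from the TCO model in this report, and the `StorageOption` structure is our own simplification rather than either party's methodology.

```python
from dataclasses import dataclass


@dataclass
class StorageOption:
    """All inputs are hypothetical placeholders, not figures from the report."""
    name: str
    cost_per_raw_tb: float   # media acquisition cost, $ per raw TB
    purchases: int           # initial buy plus refreshes over the horizon
    data_reduction: float    # effective TB stored per raw TB (1.0 = none)
    software_per_tb: float   # storage software cost, $ per effective TB
    facility_per_tb: float   # space and power over the horizon, $ per effective TB

    def tco_per_effective_tb(self) -> float:
        # Media cost scales with purchases and shrinks with data reduction.
        media = self.cost_per_raw_tb * self.purchases / self.data_reduction
        return media + self.software_per_tb + self.facility_per_tb


def flash_to_hdd_ratio(flash: StorageOption, hdd: StorageOption) -> float:
    """Ratio above 1 means flash is more expensive per effective TB."""
    return flash.tco_per_effective_tb() / hdd.tco_per_effective_tb()


# Vendor-style case: software treated as free, 3:1 data reduction credited
# to flash, two HDD refreshes (three purchases), heavy space cost on HDDs.
vendor_ratio = flash_to_hdd_ratio(
    StorageOption("flash", 60.0, 1, 3.0, 0.0, 2.0),
    StorageOption("hdd", 12.0, 3, 1.0, 0.0, 20.0),
)

# Adjusted case: software is paid for, no data reduction credit,
# a single HDD purchase, modest facility costs for both media.
adjusted_ratio = flash_to_hdd_ratio(
    StorageOption("flash", 60.0, 1, 1.0, 5.0, 2.0),
    StorageOption("hdd", 12.0, 1, 1.0, 0.0, 3.0),
)

print(f"vendor-style flash/HDD TCO ratio: {vendor_ratio:.2f}")
print(f"adjusted flash/HDD TCO ratio:     {adjusted_ratio:.2f}")
```

With these placeholders the ratio flips from below 1 (flash looks cheaper) to above 1 (HDD is cheaper). The specific values carry no weight; reproducing the 5-6x conclusion would require the report's actual inputs.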

Worsening access density is not limiting HDD usage, even for AI-related applications. According to the pro-flash camp, because HDD IOPS (or the bandwidth for moving data on or off the drive) are constrained by the standard platter speed of 7,200 RPM, as HDDs increase in capacity, by definition, they experience declining MBps/TB. We don’t quarrel with the math, but our research suggests that this practical limitation is not negatively affecting the use of HDDs in anything other than the highest performance applications. The massive scale at which hyperscalers consolidate data and workloads allows them to dynamically optimize the operations and economics of data storage, movement, and processing. And if the hyperscalers couldn’t manage around the access density issue, wouldn’t they seek HDD products that address the issue such as dual-actuator or lower capacity drives?
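
The access density arithmetic that the pro-flash camp cites is simple to reproduce. A minimal sketch, assuming a fixed per-drive throughput; the 250 MBps figure and the drive capacities are illustrative assumptions of ours, not numbers from this report, and holding throughput constant across capacities is the simplification the argument itself relies on:

```python
# Assumed, illustrative sustained throughput for a single 7,200 RPM drive.
# This value is NOT from the report.
ASSUMED_DRIVE_MBPS = 250.0


def access_density(mbps: float, capacity_tb: float) -> float:
    """Return throughput per unit of capacity, in MBps per TB."""
    if capacity_tb <= 0:
        raise ValueError("capacity_tb must be positive")
    return mbps / capacity_tb


capacities_tb = [8, 16, 24, 32]  # hypothetical drive capacities
densities = [access_density(ASSUMED_DRIVE_MBPS, c) for c in capacities_tb]

for capacity, density in zip(capacities_tb, densities):
    print(f"{capacity:>2} TB drive: {density:.1f} MBps/TB")
```

Quadrupling capacity at constant throughput cuts MBps/TB to a quarter. That is the math we do not dispute; the question is whether it matters at hyperscale.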

As a corollary to the access density argument, there is a tendency to frame the flash versus HDD debate purely through the lens of AI or, even more narrowly, AI model training. With the exception of certain types of low latency data, much of the data used for model training is stored at some point on HDDs. The notion that HDDs cannot ensure full GPU utilization is a scare tactic used by the pro-flash camp to appeal to the GPU-obsessed market. Any practical HDD limitation can be easily managed through the use of a layer of flash cache, even for the largest and highest performance AI applications. The real arbiter of GPU utilization is the server cache sitting next to the GPU and the high-bandwidth memory (HBM) sitting inside the GPU package. We suspect many investors greatly underestimate the use and value proposition of HDDs in AI-adjacent storage. In fact, in a recent Tegus interview, a Meta hardware engineer remarked that, after an initial surge of flash usage, the mix of HDDs used in AI applications is actually increasing given the superior cost economics. Even the designers of the highest performance storage systems such as DDN still offer hybrid solutions due to their superior cost-effectiveness.

Flash production economics will likely inhibit widespread flash adoption. Over the past ten years, flash production has been 9x more capital intensive than HDD manufacturing, and the continual advances required to push flash scaling will be increasingly expensive. The industry would need to invest an incremental $200 billion to build the capacity to displace anticipated HDD exabyte shipments in 2027. However, the flash industry’s actions in 2025 to date have given little indication that major suppliers subscribe to Pure’s view that an explosion in flash demand is imminent. If anything, major suppliers such as SK hynix and Micron have been reducing flash manufacturing capacity, choosing instead to shift capacity to the booming market for HBM. Moreover, flash industry cost scaling is slowing. It has taken nearly a decade to achieve each subsequent increase in the number of bits stored per cell, and the advent of 3D structures has caused costs and manufacturing complexity to balloon.

We believe excitement around Pure’s hyperscaler opportunity is misplaced. Pure disclosed in December 2024 that Meta will license its Purity operating software for use in the storage infrastructure in one of its AI-focused data centers. And while Meta will adopt Pure’s hardware architecture, it will not be purchasing Pure products. In the absence of any disclosure about the deal’s structure and economics, analysts have let their imaginations run wild. We see a high risk of investor disappointment. Meta’s own engineers have stressed that QLC flash is not yet price competitive for broad-based deployment, and Pure has acknowledged that the engagement has not been expanded to other data centers. More importantly, our conversations with several leading hyperscaler storage architects suggest the capabilities provided by Pure are narrow and replicable and that defending its initial win may prove difficult.

In addition, based on the differing technology stacks and development approaches, we believe the prospects for wins at other major hyperscalers are dim. To the extent Pure’s solution is best tailored for AI-centric applications, we believe the surging wave of ultra-high performance data infrastructure players such as DDN, WEKA, VAST Data, and Hammerspace will drown out Pure’s fledgling hyperscaler toehold. VAST Data is rumored to be in discussions to raise money from Google and NVIDIA at a $30 billion valuation because it is a real AI enabler. Not only do companies like VAST Data disprove the Pure marketing pitch of differentiated flash expertise and architectural superiority, they achieve their impressive results at scales that dwarf Pure’s largest enterprise implementations. Thus, despite getting painted with the “high performance” brush, Pure is poorly positioned to benefit from the AI-driven spending wave. Ultimately, the Meta deal may represent an ominous foreshadowing of Pure’s declining value proposition, increasing hyperscaler disintermediation, and splintering of Pure’s internal engineering efforts.

Pure’s unsuccessful pivot to hyperscalers shines a brighter light on its core enterprise products, and we don’t like what we see. We don’t deny that Pure is a credible vendor that has successfully abstracted away a lot of the complexity around storage and innovated a model of non-disruptive upgrades with its Evergreen//Forever offering. Despite the company’s respectable execution, Pure’s historical growth has been more a function of the secular shift from hybrid/disk-based systems to all-flash arrays than of material gains in market share. Pure’s unfortunate market reality is that its primary competitors such as Dell, Hewlett Packard Enterprise, NetApp, and IBM continue to benefit from massive scale and product breadth (spanning storage, security, networking, and associated software), compelling solution bundling, dominant R&D investment, superior corporate access, and the often undeniable force of incumbency. Arguably, NetApp is more deserving of investor attention, with superior hyperscaler penetration, broader application coverage, and a similar software advantage with its ONTAP offering. By contrast, Pure has failed to distinguish itself in hyperscaler applications, its traditional focus on structured data has resulted in a narrower perceived breadth of application expertise (particularly with regard to AI that leverages unstructured data), and whatever advantages it once enjoyed with its Evergreen//Forever maintenance plan have been nullified by the introduction of copycat offerings from competitors. Finally, Pure’s cloud storage offering Evergreen//One is neither differentiated nor representative of material addressable market expansion. Taken together, we find it hard to escape the conclusion that Pure is not well positioned for either cloud migration or high performance AI applications.

We anticipate declining revenue growth and increasing margin pressures. FY2024 and FY2025 represented two of Pure’s three lowest revenue growth fiscal years, and we highlight that Pure’s history of consistent >25% YoY growth in remaining performance obligation has suffered a step-function decline over much of the past six quarters. Pure’s attractive gross margins, which have exceeded 70% in five of the past six fiscal years, have long served as justification to view Pure as deserving of SaaS-like valuation multiples. However, to the extent Pure is at all successful penetrating the hyperscaler market with its mid-forties gross margin Pure//E products targeting HDD displacement, corporate gross margins are sure to suffer, which should be a harbinger of valuation multiple contraction. We also question the investor inclination to consider Pure’s Evergreen revenues as deserving of SaaS-like valuation credit. In reality, Evergreen//Forever and Evergreen//Flex simply represent the prepayment of maintenance and support fees and future hardware upgrades, and Evergreen//One is a subscale cloud storage platform. More concerning, we do not believe Pure’s disclosures help investors adequately understand the capital requirements and accounting assumptions underlying these offerings, and we suspect Pure has done its best to minimize the income statement impact of costs related to its subscription services.

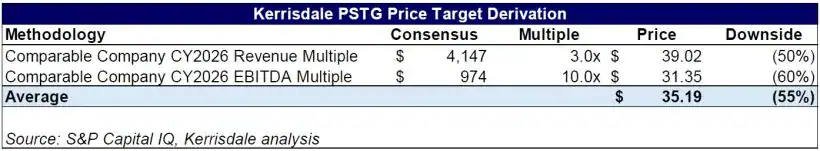

Pure’s premium valuation is undeserved. Pure’s perception as a next-generation supplier of high performance products has pushed its valuation to new highs in the current AI-obsessed market. However, the practical reality is that Pure lacks leverage to AI spending, and the massive cloud migration underway may actually result in net harm to its enterprise business. Some analysts flatter their price targets by suggesting Pure should be ascribed SaaS valuation multiples. Very little of Pure’s support business is really “software” or “software-like”, as it is contingent on hardware, far less sticky, and burdened by a substantial embedded capital expenditure requirement. At best, we believe Evergreen//One should be viewed as roughly equivalent to a small-scale cloud service or storage provider, such as Backblaze, DigitalOcean, or Dropbox, companies that trade at EBITDA multiples about 60% lower than where Pure trades. Ultimately, Pure is a storage company, and despite all the secular drivers propelling the global need for storage products, investors have consistently ascribed storage companies lower multiples than those with other, albeit somewhat similar, end market exposures due to the sector’s history of commoditization and competition. As much as some investors want to view Pure as something different, the company’s peers are its direct competitors, and NetApp in particular. Some may protest since NetApp’s headline revenue growth is burdened by its large legacy hybrid systems business and public sector exposure, but NetApp generates ~1,200bps higher operating margins while offering a stronger AI and hyperscaler story than Pure. Despite this, Pure trades at an obscene premium to NetApp.

We foresee a reversion to Pure’s historical 3.0x revenue multiple, representing 50% downside from current levels. With well over $3 billion in LTM revenue, we believe Pure should trade at peer company profit multiples based on its current margin structure as opposed to some theoretical scenario where it magically achieves NetApp’s vastly superior margin profile. Analysts need to come to terms with the fact that Pure simply may not have the operating leverage they wish it had. Thus, valuing Pure’s consensus CY2026 EBITDA at NetApp’s 10x multiple yields 60% downside. Averaging these two methodologies yields a $35 per share price target representing 55% downside.
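
The blending arithmetic can be checked in a few lines. A minimal sketch using only the figures stated above; the implied current share price and implied current revenue multiple are back-of-envelope inferences from those rounded figures, not quoted values:

```python
# Figures stated in the text above.
revenue_multiple_downside = 0.50  # reversion to the historical 3.0x revenue multiple
ebitda_multiple_downside = 0.60   # CY2026 consensus EBITDA at NetApp's 10x multiple
price_target = 35.0               # dollars per share
historical_revenue_multiple = 3.0

# Simple average of the two methodologies.
blended_downside = (revenue_multiple_downside + ebitda_multiple_downside) / 2

# Inferred, approximate: the share price at which $35 is 55% lower.
implied_current_price = price_target / (1 - blended_downside)

# Inferred, approximate: the revenue multiple from which 3.0x is 50% lower.
implied_current_revenue_multiple = historical_revenue_multiple / (
    1 - revenue_multiple_downside
)

print(f"Blended downside: {blended_downside:.0%}")
print(f"Implied current share price: ~${implied_current_price:.0f}")
print(f"Implied current revenue multiple: ~{implied_current_revenue_multiple:.1f}x")
```

Because the target and the percentages are rounded, the implied price is only approximate.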

Our short call is even more timely after Pure’s recent FQ2 earnings report, which sent its shares up over 30%. For much of the past three years, consensus revenue estimates have aligned with Pure’s guidance, and the company has usually posted modest 1-2% beats, not much different from this quarter’s 2% outperformance. Pure management also modestly increased its FY2026 revenue guidance by 3%, which sounded more like management correcting for excess conservatism due to macro concerns when their initial guidance was released in May than any newfound growth. And despite the revenue beat and raise, neither the recent quarter nor revised guidance showed any signs of long-awaited operating leverage. In fact, very little of what Pure disclosed contained any confirmatory data points surrounding the major elements of the bull thesis. Some of the strength came from Pure’s niche Portworx offering, and management largely evaded an analyst question regarding Series//E traction. Commentary regarding Meta merely met previous guidance, and management was noticeably conservative and noncommittal regarding new hyperscaler wins. While Pure may not be saddled with public sector exposure like its peers, it remains stagnant in the AFA market, where NetApp reported it gained the #1 position in Q1 based on IDC data. And speaking of NetApp, investors who believe Pure is alone in disrupting enterprise storage should listen to the NetApp earnings call, as the CEO’s review of customer wins was virtually identical to Pure’s, with the exception of NetApp deriving more substantial benefits and traction from AI-centric applications. Overall, nothing we heard changed our view of Pure’s increasingly challenged competitive position.
