Antero Peak Strategy's commentary for the second quarter ended June 30, 2025.

“I don't want an organization of order-takers because order-takers don't take responsibility for results... Innovation and progress are achieved only by those who venture beyond standard operating procedure.” - D. Michael Abrashoff, “It’s Your Ship”

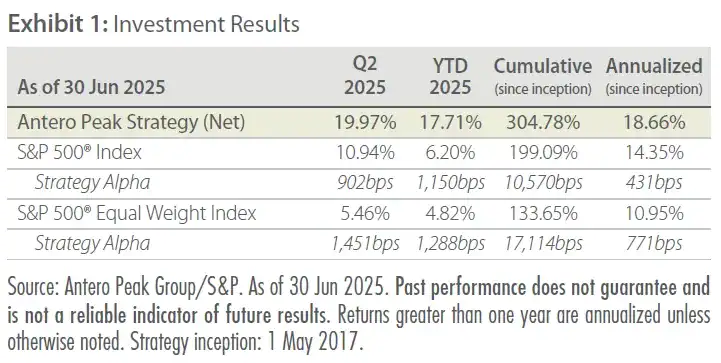

In Q2, the Antero Peak Strategy (net) outperformed the S&P 500® Index by 902bps. This follows a strong 2024 and Q1 2025, in which the Strategy outperformed the benchmark by 637bps and 239bps, respectively. We are pleased with our continued strong performance in the first half of 2025, having achieved YTD relative outperformance versus the S&P 500® Index of 1,150bps.

Despite an ever-swinging pendulum of macro conditions—tariffs/no tariffs, inflation/no inflation, rate cuts/no cuts, risk-on/risk-off, global unrest/world peace—we believe our portfolio and risk management execution have shown great consistency in 2025.

Performance Review

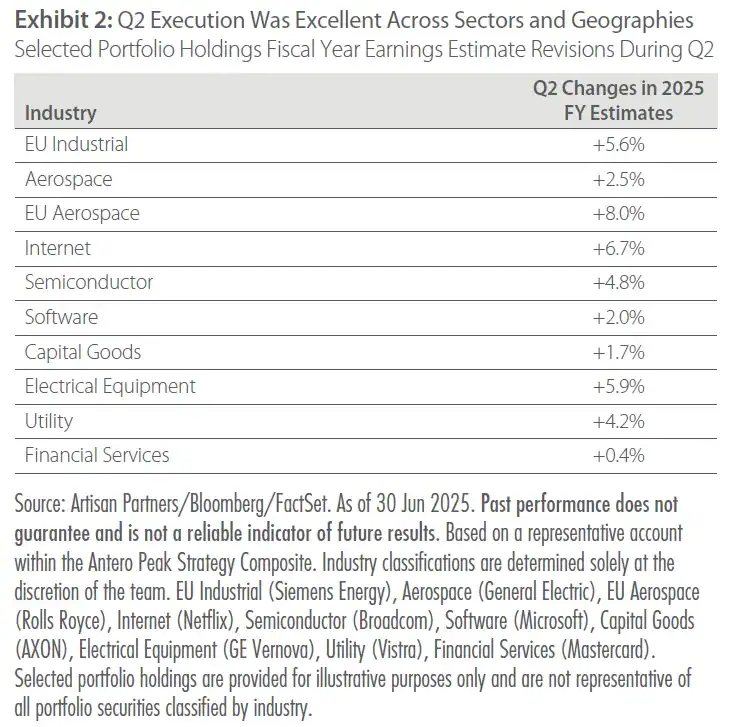

Once again, our results were tied to the fundamental performance of our underlying investments. Top contributors in Q2 included Microsoft, General Electric, Netflix, Broadcom, Rolls-Royce and Siemens Energy. During the quarter, these companies experienced sharp positive earnings revisions across the board. This stood in stark contrast to the S&P 500® Index, which saw 2025 estimates revised 3.6% lower. Our portfolio, on a blended average basis, experienced +4.6% upward revisions, with the majority of our portfolio companies faring better than the benchmark.

Bottom contributors for Q2 included Equinix, Crown Castle, Apollo Global Management, Primo Brands, Safran SA and VanEck Semiconductor ETF. The absolute impact of our “losers” was mild, with only one position (VanEck Semiconductor ETF) costing us more than 50bps. This led to a very strong slugging ratio of 2.5 (the ratio of dollars gained on “winners” versus dollars lost on “losers”) and a Sharpe ratio 4X that of the benchmark. Good risk management was also on display in Q2: we had just 76% downside capture into the market lows on April 8, and as markets recovered, we experienced 120% upside capture.
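For readers less familiar with capture ratios, the downside and upside figures quoted above can be read as the portfolio's return divided by the benchmark's return over the same window. The sketch below is a minimal illustration under that common definition; the function name and the window returns are hypothetical and are not the Strategy's actual figures or methodology.

```python
def capture_ratio(portfolio_return: float, benchmark_return: float) -> float:
    """Portfolio return as a fraction of the benchmark return over the same window."""
    if benchmark_return == 0:
        raise ValueError("benchmark return must be non-zero")
    return portfolio_return / benchmark_return


# Hypothetical window returns (assumptions for illustration only).
down = capture_ratio(portfolio_return=-0.076, benchmark_return=-0.10)
up = capture_ratio(portfolio_return=0.12, benchmark_return=0.10)

print(f"downside capture: {down:.0%}")
print(f"upside capture: {up:.0%}")
```

A downside capture below 100% paired with an upside capture above 100% is the asymmetry the paragraph describes.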

The outlook for the portfolio remains remarkably strong, in our view. Based on consensus estimates, our holdings are projected to outgrow the S&P 500® Index by approximately 4% in the second half of 2025. Our research also supports a blended 5% upward revision to consensus earnings estimates for our portfolio companies over the same period. At the same time, we see downside risk to overall S&P 500® Index EPS, which has continued to trend lower as of this writing—particularly for 2026 and 2027. This dynamic sets the stage for a widening relative growth gap that could approach double digits in the second half of 2025. Meanwhile, our portfolio has continued to generate twice the return on invested capital (ROIC) of the index, with a slightly lower cost of capital.

Overall, we’re very pleased with our execution across both 2024 and the first half of 2025. Our process has shown strong performance across a variety of elements, including modeling accuracy, sector allocation and risk management.

A Culture of Accountability

At just 36 years old, Michael Abrashoff was appointed commander of the USS Benfold, a US Navy destroyer equipped for anti-aircraft warfare and among the first vessels fitted with the Aegis Ballistic Missile Defense System. By 2010, the Benfold became the first ship capable of simultaneously defending against both ballistic and cruise missiles.

When Abrashoff took command, the Benfold was one of the worst performing ships in the Navy. Within a year, it became the best. He accomplished this remarkable feat by transforming the ship’s culture. He listened intently to his crew, eliminated bureaucratic friction and empowered every sailor to take ownership of the mission.

The title of his book, “It’s Your Ship,” captures this ethos. Abrashoff encouraged each sailor to act as though they were the captain: to make decisions, speak up and be accountable. He removed the traditional command-and-control mindset and replaced it with decentralized leadership rooted in trust, humility and clear expectations. That mindset resonates deeply with our team. In high-performance environments, whether on a destroyer or in an investment team, culture drives outcomes. Ownership, humility and a shared sense of purpose are not just ideals; they’re strategic edges just as much as good models are.

“Show me an organization in which employees take ownership, and I will show you one that beats its competitors.” - D. Michael Abrashoff, “It’s Your Ship.”

When I founded the Antero Peak Group in 2016, we started with just four senior investment team members. Today, we are all still here. The continued presence of these core team members speaks not only to their individual talent and commitment, but also to the culture we’ve built, one rooted in trust, mutual respect and shared ownership.

We’ve created an environment where people feel empowered, accountable and invested in the outcome—not just their own, but the team’s and the firm’s. That sense of alignment is intentional. We don’t separate performance from culture; we believe the two are inseparable. People who thrive here think like owners, take initiative and hold themselves to the highest standards.

The longevity of our core team isn’t accidental—and it’s not in spite of the high bar we set. It’s because of it. High expectations attract those who want to be challenged and who take pride in building something lasting. That’s the foundation we started with, and it remains central to who we, the Antero Peak Group, are today.

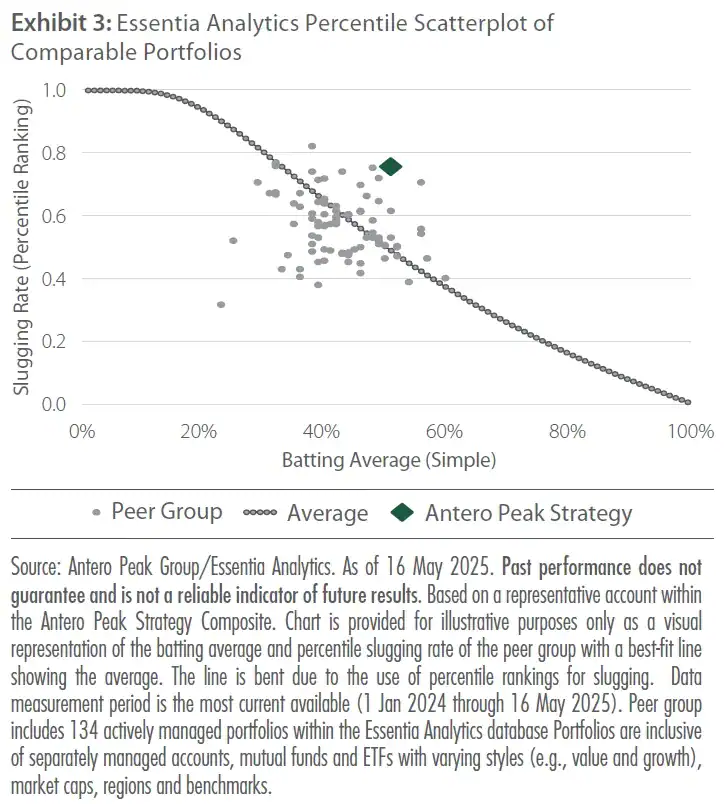

Revisiting Batting and Slugging

In the past, we have discussed the interplay of batting average and slugging percentage. Batting average represents the percentage of stocks active managers select that beat their benchmark. Slugging percentage, on the other hand, represents the amount gained on “winners,” divided by the amount lost on “losers.” For the three-year period ending March 31, 2025, the median batting average for large-cap active managers was 45%, with the top quartile around 51%.
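As a minimal sketch of the two statistics defined above, the Python below computes a batting average and a slugging ratio from a list of per-position results. The function names and sample figures are illustrative assumptions, not the Strategy's holdings or its actual calculation.

```python
from typing import Sequence


def batting_average(excess_returns: Sequence[float]) -> float:
    """Share of positions whose return beat the benchmark (excess return > 0)."""
    if not excess_returns:
        raise ValueError("need at least one position")
    winners = sum(1 for r in excess_returns if r > 0)
    return winners / len(excess_returns)


def slugging_ratio(pnl: Sequence[float]) -> float:
    """Amount gained on winners divided by amount lost on losers."""
    gains = sum(p for p in pnl if p > 0)
    losses = -sum(p for p in pnl if p < 0)
    if losses == 0:
        raise ValueError("no losing positions; ratio is undefined")
    return gains / losses


# Hypothetical per-position excess returns (percent) and P&L (dollars).
sample_excess = [4.0, -1.0, 2.5, -0.5, -2.0]
sample_pnl = [300.0, -60.0, 150.0, -40.0, -100.0]

print(f"batting average: {batting_average(sample_excess):.0%}")
print(f"slugging ratio: {slugging_ratio(sample_pnl):.2f}")
```

In this made-up sample, only two of five positions win, yet gains are 2.25 times losses, which is the kind of asymmetry the two measures are meant to separate.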

The commonly held belief that success in investing stems from being right more than 50% of the time oversimplifies a much more nuanced reality. In our view, and consistent with insights from leading investors like Stanley Druckenmiller, the true driver of long-term alpha is not how often you’re right, but how much you make when you are.

Our investment process prioritizes slugging over batting average. By concentrating capital in high-conviction ideas identified through rigorous diligence and ongoing scrutiny, we seek to maximize the asymmetry between “winners” and “losers.” While this approach demands more upfront research and greater accountability, we believe it allows us to capture outsized returns when we are right and cut losses quickly when we’re not.

This high-conviction focused strategy has delivered tangible results. Just as baseball evolved beyond batting average to more predictive stats like on-base plus slugging (OPS), we believe investing should evolve to focus on the true drivers of outperformance: payoff ratios, position sizing and disciplined risk management. For example, for an equally weighted portfolio with ~10% single-name volatility, annual returns are surprisingly insensitive to batting average. Raising the batting average of this theoretical portfolio from 50% to 55% could add a mere 100bps of alpha, while improving the slugging has five times the impact.
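The relative sensitivity described above can be explored with a simple expected-value identity: for an equally weighted book, expected excess return per position is roughly hit rate times average win, minus miss rate times average loss. The sketch below is a toy model under that assumption. The function name, the 10% average loss and the payoff ratios are hypothetical inputs, and the output is not a reconstruction of the analysis behind the figures in this letter.

```python
def expected_alpha(hit_rate: float, avg_loss: float, payoff_ratio: float) -> float:
    """Expected excess return per position for an equally weighted book.

    hit_rate:     probability a position beats the benchmark
    avg_loss:     average loss on a losing position (positive number)
    payoff_ratio: average gain on a winner divided by avg_loss
    """
    avg_win = payoff_ratio * avg_loss
    return hit_rate * avg_win - (1.0 - hit_rate) * avg_loss


# Hypothetical inputs: 10% average loss, symmetric payoffs, coin-flip hit rate.
base = expected_alpha(hit_rate=0.50, avg_loss=0.10, payoff_ratio=1.0)
better_batting = expected_alpha(hit_rate=0.55, avg_loss=0.10, payoff_ratio=1.0)
better_payoff = expected_alpha(hit_rate=0.50, avg_loss=0.10, payoff_ratio=1.5)

for label, value in [
    ("base", base),
    ("hit rate 55%", better_batting),
    ("payoff 1.5x", better_payoff),
]:
    print(f"{label:>12}: {value * 1e4:.0f} bps")
```

Under these toy inputs, lifting the payoff ratio moves expected alpha more than lifting the hit rate by five points. The exact multiple depends entirely on the assumed inputs.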

We follow a disciplined approach grounded in two core principles: First, it is likely we will often be wrong; second, it is likely we will often be right. By fully embracing these truths, we focus on cutting losing positions quickly and sizing winning positions aggressively—an approach that has been key to driving our historical returns.

Culture is essential to executing this framework effectively. Team members must set aside personal bias and the need to be “right,” instead approaching decisions with humility and an acceptance that being wrong is part of the process. This mindset is not just tactical; it’s character-driven. As Mike Abrashoff taught the sailors on his destroyer, the team must prioritize the mission (in our case, the portfolio) over ego or personal ambition.

Industry norms—like low turnover and balanced sector exposure—can also hinder this dynamic, suppressing the payoff ratio and diluting the potential for outperformance. In fact, even with strong research and a decent hit rate (in the top quartile), these constraints often result in returns just above fees.

Since late 2017, our portfolio has delivered a 52% hit rate with a 300% absolute slugging ratio (240% relative to the S&P 500® Index). This validates our approach, which emphasizes rigorous time allocation to both our largest positions and those that are underperforming. Our process has helped us identify negative fundamental revisions early, which can lead to a dramatically reduced hit rate on a go-forward basis. This enables us to objectively reduce risk fast. This focus on maximizing payoffs while minimizing losses is at the heart of our success.

A Focus on Alpha Over Beta

We believe there’s a tendency to categorize managers as value, growth, core and so on. To be clear, we are an alpha-focused portfolio. Our goal is to outperform the S&P 500® Index every year—regardless of style factors or market conditions. This objective is supported by the quantitative analysis of our performance. We’ve outperformed the S&P 500® Index in six of the last nine calendar years, including 1H 2025.

It’s particularly instructive to examine the sources of our tracking error over this period. Since inception, our asset-specific (idiosyncratic) risk has averaged approximately 55%. When combined with industry risk, nearly three-quarters of our relative tracking error can be attributed to stock and sector selection. In contrast, style factors such as value, growth, momentum and overall market direction (elements beyond our control) have had a far smaller impact. In our view, this is exactly what one should want from a true active manager: returns driven by security and sector selection, not by style drift or macro conditions.


Conclusion

Given recent client discussions and the increasing relevance and importance of artificial intelligence (AI), we thought we would share our perspective on the AI infrastructure landscape and why we think this tectonic shift in computing architecture will continue to provide a fertile investment period. We expect the underlying investment in infrastructure (silicon, energy and data pipelines) to remain very robust through 2030, to support the next wave of generative-AI growth: agentic AI. We hope you find our thoughts in the pages that follow useful and welcome the opportunity to discuss them further with you.

As you all know, as part of our personal and professional development, we keep a book club of sorts. This quarter, we read “It’s Your Ship.” An extended list can be viewed here.

Thank you for your continued trust and partnership.

AI Update

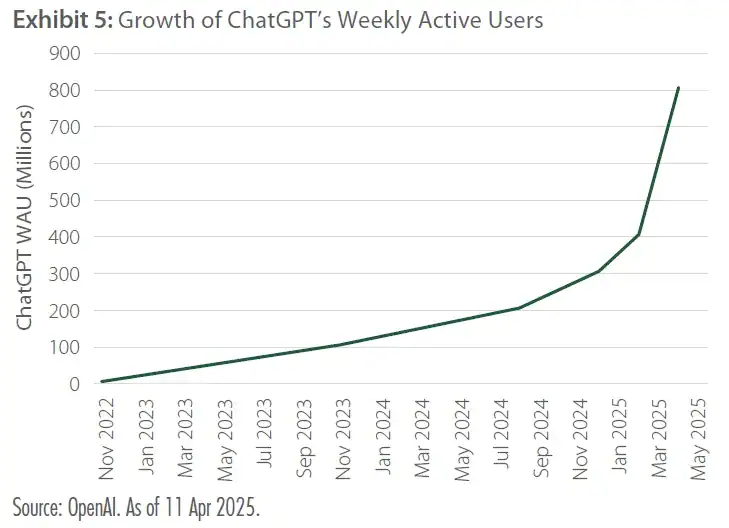

As we wrote in our Q1 2023 investment letter, the Cambrian moment for AI decisively arrived in November 2022, with ChatGPT bursting onto the scene. ChatGPT has now scaled to nearly one billion monthly active users (MAUs) in under three years. This explosive expansion is reshaping capital markets, industrial supply chains and corporate strategy. For the investing community, it has also introduced considerable volatility in AI stocks, driven by 1) skepticism around the sustainability of infrastructure investment, 2) questions about the return on investment (ROI) and use cases of AI, and 3) misconceptions surrounding the implications of new model architectures (e.g., the DeepSeek moment, which caused NVIDIA’s stock to decline nearly 17% in one day, the largest one-day market value decline for any stock in history).

These fears seem misplaced in hindsight, as each of the worries has been addressed in the past six months: 1) hyperscale capital expenditure has materially stepped up YTD, with Amazon, Google and Meta explicitly raising forecasts, and OpenAI beginning to ramp up the initial $100 billion phase of Stargate; 2) new agentic AI use cases have emerged, and revenue growth at Azure has materially accelerated; and 3) ChatGPT’s weekly active users (WAUs) have more than doubled YTD as reasoning models have driven a material inflection in tokens (usage).

AI Progress and Scaling

We do not envision a peak in AI spending on either training or inferencing through the end of the decade. The continued acceleration of generative AI (GenAI) is placing stress on the existing infrastructure, whether it’s the supply chains of the physical chips, the data centers or the power supply. We hypothesize that capital spending on AI infrastructure will remain frantic until we see the capabilities of AI driven by the largest deployed data center projects.

Hyperscalers, the neoclouds (OpenAI, xAI, Anthropic, etc.) and now large sovereign AI projects (HUMAIN) are investing in larger training clusters (to advance model capabilities) and in densifying their accelerated compute architectures to reduce the per-token cost of inferencing, as agentic AI has driven a material acceleration in usage.

Over the past 24 months, the industry has moved from a “train-at-any-cost” mindset toward an operating model where inference drives the majority of incremental demand. Yet, the two workloads remain interdependent and place markedly different stresses on silicon, networking and data center economics.

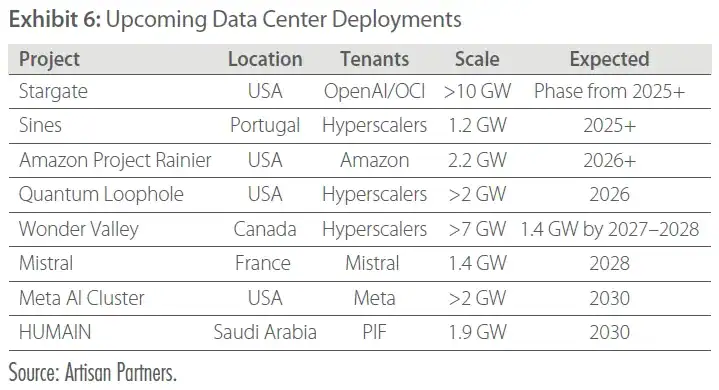

Our data center tracker has highlighted significant projects being planned for the next 4–5 years. The Stargate development alone is projected to potentially reach ~$500 billion of capital deployed and require 10 GWs of power. If we haven’t reached artificial general intelligence (AGI) following this scale of investment, we may see a slowdown thereafter. However, given the recent improvements in model capabilities, we think AI investment will remain robust through the end of the decade.

In the following section, we address the investment pipeline for large-scale training clusters and how expanding agentic use cases and positive ROI on spend will require more inferencing infrastructure.

Training Clusters Are Getting Bigger and Mega-Scale Infrastructure Project Runways Are Long

Scaling laws, the continued growth in parameter counts and more compute-intensive post-training enhancements are all driving increasing spend on training large language models (LLMs). We continue to see larger training clusters being built, as we think this higher initial training cost can create incremental model capabilities that importantly reduce future inferencing costs. The superior compute capacity effectively unlocks new model architectures that can reduce per-token flops and materially reduce memory requirements.

The computational demands of LLMs scale with parameter count and data volume. The pre-training phase often involves petabyte-scale datasets. For example, Ernie 4.0, the foundational model from Baidu (the Chinese search engine), is widely reported as having multiple trillions of parameters, while GPT-4 and DeepSeek-V3 are reported to utilize roughly 1.7 trillion and 671 billion parameters, respectively. Meta has repeatedly delayed Behemoth, which is expected to be the largest model in the Llama 4 family, with a reported parameter count of nearly 2 trillion.

Innovation has been apparent in post-training techniques, with the larger models supporting Mixture of Experts (MoE) architectures, key-value cache compression and new distillation techniques. GPT-4 is an MoE model and has almost 10X the parameter count of GPT-3 (1.7 trillion versus 175 billion parameters). Effective new post-training techniques, such as reinforcement learning and chain-of-thought reasoning, explore multiple solution paths, which drives higher computational intensity. For companies that are trying to drive scale, investing in bigger training runs can help minimize lifetime model costs, which can compound over billions of queries.

The largest training cluster to date is Colossus, the supercomputing cluster built by xAI to power Grok 3, which combines 200,000 GPUs. However, this will likely seem small in the coming years, given the number of hyperscalers developing plans and dedicating resources to build clusters of more than one million GPUs. For example, the initial $100 billion phase of the Stargate project will require 1.2 GWs of power (this alone is 20% of the entire power consumption of the NOVA area, which is the largest data center market globally and has been built up over decades).

Planned data center capacity expansions in the US have increased 150% over the past year—with almost 40 GWs of expansion currently in the planning and development phase across the country. Similarly in Europe and Asia, expansion plans have more than doubled in the last year. Some of the largest data center developments are yet to come online, with numerous 1 GW deployments scheduled to come online in the next few years. This is a huge amount of data center capacity that is still to be built, before any compute architecture is deployed. Sovereign AI factories are emerging, with multiple deals announced in the Middle East over several months. NVIDIA on its most recent earnings call confirmed this level of demand, noting nearly 100 AI factories built in the quarter and noting it sees visibility to projects requiring “tens of GW” of power.

Agentic AI Era Could Drive Material Spend in Inferencing Infrastructure With Addition of Billions of New Computing Users

While blockbuster training cycles could continue to set narrative peaks, sustained value should accrue to vendors and utilities that can deliver low-latency, low-cost inference at scale. The transition from predictive models to autonomous agents is underway, unlocking new revenue pools but demanding exponential compute resources. NVIDIA’s Blackwell family, particularly the GB200 and GB300 rack-scale systems, should provide the performance per watt and per token that make large-scale agentic AI economically feasible. We think enterprises that align their data center strategies with this roadmap could be positioned to capitalize on the next wave of AI-driven productivity.

Agentic AI systems can scale out by working together and can automate and execute tasks with minimal human oversight. When an agentic AI receives a task (e.g., “plan a marketing campaign,” “write a Python script,” “summarize or edit this document”), the input text is broken down into tokens (converting human text into a numerical format that the model can process). This token generation is compounding.

In its Q3 FY2025 earnings call, Microsoft noted that it had processed 100 trillion tokens in the quarter, up ~5X YoY within Azure OpenAI services. At this scale and growth, Microsoft is already driving multi-exabytes of traffic. While inference often operates at lower precision (FP16, FP8) to reduce memory usage, this does not mitigate the cost, as agentic AI systems simply generate more tokens per query. For popular models like ChatGPT, serving billions of requests daily means the total compute cost can surpass the initial training expense.

To put it simply, the rise in agentic AI has effectively added billions of new users to existing compute infrastructure in less than two years, overloading the system and necessitating scaled accelerated compute infrastructure investment.

Large-Scale Investment Requires Positive ROI

Large-scale infrastructure investment needs to be met with positive ROI for it to be sustainable. Two proof points of this positive ROI include 1) better profitability among the hyperscalers; and 2) expansion of use cases of agentic AI that are driving tangible productivity gains. As an investor, this creates an interesting opportunity around the specialized hardware that is driving the inference cost per token lower, including GPUs and custom ASICs.

Hyperscalers have reported positive gross margin developments from their AI services investments. Azure and Amazon Web Services (AWS) have both called out how AI services gross margins are tracking above cloud gross margins at commensurate scale and the companies expect terminal margins to be similar. This was surprising to us, as initial cloud deployments had very strong total cost of ownership (TCO) arguments for lift and shift from existing on-premise workloads, while AI services have typically been net new workloads. This margin profile has allowed Microsoft to materially beat its internal margin expectations over the past 12 months, now guiding operating margins to be up YoY, versus an initial expectation for ~100bps dilution from its AI investments. We believe this tangible ROI realization has been a key driver of accelerated investment in the underlying compute architecture—we have seen large capital expenditure increases this year at Amazon, Google, Meta and Oracle. Meanwhile, Microsoft has shifted its capital expenditure plans in the next 12 months to favor compute chips (GPUs and CPUs) over data center infrastructure.

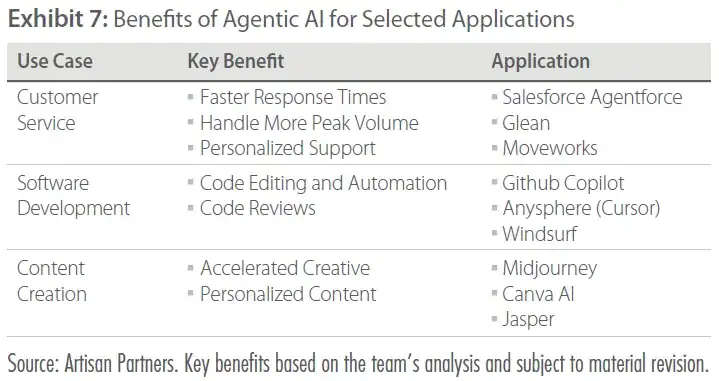

Expansion of Agentic AI Use Cases Is Helping Drive Productivity Enhancements

Industries continue to face pressure to improve efficiency and reduce costs, driving the adoption of automation and agentic AI use cases. Multi-agent system (MAS) frameworks facilitate collaboration among agents, each specializing in tasks like natural language processing, computer vision or data analysis. MAS is crucial for applications requiring diverse expertise, such as software development or architectural design.

Customer service has emerged as a leading use case for agentic AI due to its ability to enhance efficiency and personalization. For example, Salesforce’s Agentforce has enabled AI agents to handle customer queries autonomously, escalating only complex issues to human agents. Adoption is not confined to the technology industry. Wells Fargo CIO Chintan Mehta revealed in an interview with VentureBeat that the bank’s AI assistant exceeded 245 million customer interactions in 2024, a more than 10-fold increase from 2023. Another interesting anecdote was that roughly 80% of 2024’s usage was in Spanish.

Amazon CEO Andy Jassy has been vocal about the productivity gains of GenAI in recent shareholder letters: “As we roll out more Generative AI and agents, it should change the way our work is done. We will need fewer people doing some of the jobs that are being done today, and more people doing other types of jobs…but in the next few years, we expect that this will reduce our total corporate workforce as we get efficiency gains from using AI extensively across the company.”

Rapidly growing new companies that leverage AI are also emerging. They have the potential to disrupt existing industries and, in turn, to shape corporate strategies even among the mega-cap companies.

Within the software ecosystem, Cursor, developed by Anysphere, is growing rapidly in the AI coding assistant market, with reports that its annual recurring revenue (ARR) has reached $500 million and that the figure has been doubling every two months. This growth is notably faster than that of many established software companies. We have seen M&A among software companies accelerate, with ServiceNow’s acquisition of agentic AI solution Moveworks; Salesforce’s acquisition of data integration tool Informatica; and OpenAI’s acquisition of AI coding tool Windsurf.

Control over compute, energy, data and talent is becoming the new competitive moat for the mega-cap tech companies, and we have seen recent evidence of the mega-caps evaluating their corporate strategies in light of the shifting AI landscape. To us, it signals a critical inflection point, where AI capabilities can determine future market leadership across the technology sector. Recent reports of large potential investments at both Apple and Meta are indicative of this.

Apple has reportedly been in discussions to acquire Perplexity AI for $14 billion, which comes as a surprise given how nonacquisitive the company culture has been. The deal to acquire Beats for $3 billion in 2014 remains the largest deal in the company’s 49-year history. Apple has delayed Siri integration, and its Apple Intelligence features have not resonated well with users, compared to the AI adoption at ChatGPT. Integrating AI search capabilities into Safari and Siri to create a seamless, AI-enhanced user experience is likely a critical avenue that Apple would like to pursue, whether by organic or inorganic means (i.e., Perplexity).

An acquisition could help accelerate and augment its internal R&D effort, and a deal for Perplexity would be a defensive hedge for two reasons. First, it would protect against a potential adverse ruling in the DOJ review of the Google search agreement (worth a reported $25 billion per year). Second, it would position the company to compete against OpenAI, which has been actively working on hardware AI systems and successfully recruited former Apple designer Jony Ive. Ive was integral to the design philosophy of many of the iconic Apple devices, including the iMac, iPod, iPhone and iPad.

Meanwhile, Meta has seemingly shifted its Metaverse aspirations to achieving AGI. It has entered a deal to acquire a 49% non-voting stake in ScaleAI for $14.3 billion, with the ScaleAI co-founder, Alexandr Wang, joining Meta to lead a new “Superintelligence” lab. Meta is also in discussions to acquire venture capital fund NFDG, run by Nat Friedman and Daniel Gross, and reportedly is offering $100 million sign-on bonuses to key talent at OpenAI and in the field of GenAI research.

Meta’s ambitions seem more tied to acquiring high-quality training data through ScaleAI’s data labeling expertise and establishing a leadership position in advanced AI research. This may also be defensive in nature, given the challenges Meta has encountered with delayed model launches (Behemoth) and talent leakage to the frontier LLMs. However, we certainly see Meta as well placed to leverage this AI expertise in its advertising algorithms to drive conversion and new ad formats, as well as to accelerate innovation in its virtual reality/augmented reality (VR/AR) initiatives.

These large-scale strategic investments are likely to further catalyze the fundamental shift toward agentic AI systems over the next few years. As investors, we think the silicon and power providers are the biggest near-term beneficiaries, given they are the critical enablers and the primary bottlenecks in supply.

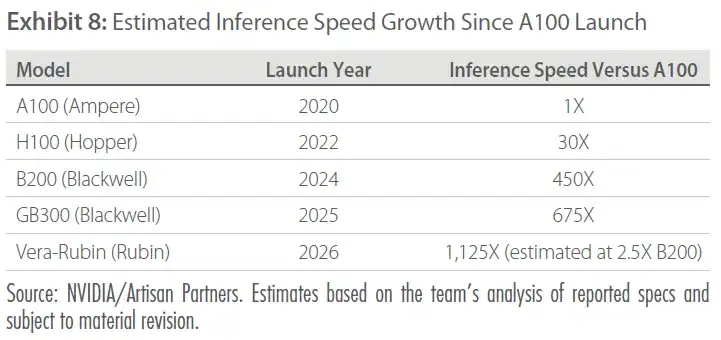

NVIDIA's Computing Architecture Is Helping Enable the Scale Up of Agentic AI

NVIDIA’s new accelerated compute architectures have enabled the scale up of agentic AI by reducing the inferencing costs per token. The B200 and B300 introduce higher density high-bandwidth memory (HBM) to remove bottlenecks for large context windows. For context, the GB300 (currently in testing, with volume shipments to hyperscalers expected in 2H 2025) is almost 700X more efficient at inferencing than the Ampere chips that were in production in 2020–2021.

NVIDIA’s management is emphasizing “lowest cost per inference token” as the design goal of new product launches. NVIDIA has demonstrated expertise in advancing the inferencing efficiency of its compute architecture, supported by an ambitious roadmap and accelerated product cadence that creates significant hurdles for competitors attempting to keep up. Following the debut of its Blackwell architecture in late 2024, the B300 (shipping in 2H 2025) is expected to deliver a 50% boost in inferencing efficiency with only a 10%–15% increase in system cost (ASP) versus the B200. This enhancement offers substantial performance-per-dollar advantages for hyperscalers building out large clusters over the next few years. The upcoming Vera-Rubin architecture in 2026 and Feynman in 2028 are both strategically designed to help optimize inferencing workloads, which we expect will further cement NVIDIA’s dominance.
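The performance-per-dollar point can be made concrete with simple arithmetic on the two figures quoted above (a 50% efficiency uplift against a 10%–15% cost uplift). The sketch below is illustrative only; the function name is hypothetical, and it assumes the efficiency and cost changes can be compared as simple ratios.

```python
def perf_per_dollar_gain(perf_uplift: float, cost_uplift: float) -> float:
    """Relative change in performance per dollar, given fractional uplifts."""
    return (1.0 + perf_uplift) / (1.0 + cost_uplift) - 1.0


# 50% inferencing-efficiency uplift at the two ends of the quoted cost range.
low = perf_per_dollar_gain(perf_uplift=0.50, cost_uplift=0.15)
high = perf_per_dollar_gain(perf_uplift=0.50, cost_uplift=0.10)

print(f"performance per dollar improves by roughly {low:.0%} to {high:.0%}")
```

On this simple ratio basis, a buyer would get roughly 30% to 36% more inferencing efficiency per dollar of system cost.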

On top of the rapid hardware progress, NVIDIA’s CUDA and TensorRT stack is also helping to boost inference throughput.

“Rapid advancements in NVIDIA software algorithms boosted Hopper inference throughput by an incredible 5X in one year and cut time to first token by 5X,” stated NVIDIA CFO Colette Kress on the Q3 FY2025 earnings call.

Key AI Investments

Historically, during tectonic computing shifts, the best investments throughout the cycle have typically been the technology leaders, whether it was IBM during the mainframe era, Intel and Microsoft during the PC era, Apple and Qualcomm during the smartphone era, or Amazon and Microsoft during the cloud computing era. We don’t see much reason to stray from the playbook for the era of accelerated compute. The highest margin businesses in semiconductors are NVIDIA and Broadcom, which are the technology leaders for AI infrastructure at the compute and networking layers. NVIDIA leads in system computing, while Broadcom leads in Ethernet networking and custom ASIC design (helping to make hyperscaler capital expenditure more efficient and, in turn, freeing up more budget for NVIDIA). Both are considered pivotal to the AI infrastructure build-out. We see a catalyst-rich path for both: for NVIDIA as it accelerates its product roadmap and scales out its rack-level ecosystem, and for Broadcom as it begins to ramp its new Tomahawk 6 switch and as it hits a projected inflection point in new customer ASIC ramps from 2026–2028.

NVIDIA's Supply Improvements Provide Confidence on Near-Term Estimate Revisions

Demand for NVIDIA AI compute architecture is still outstripping supply, but the pain points in the supply chain have been shifting. We find it most accurate to build up our near-term estimates to solve for maximum capacity in the bottlenecks. In 2024, the primary constraint was chip-on-wafer-on-substrate (CoWoS) capacity, so we rearchitected our revenue model to map CoWoS capacity upgrades at Taiwan Semiconductor (TSM).

Since late 2024, the bottleneck in supply has shifted to rack-scale deployments of the GB200 system, so we have started mapping our next 24-month estimates to supply capacity. NVIDIA recognizes revenue when it ships its GPUs to its original design manufacturer (ODM) partners (HonHai, Quanta and Wistron), but the ramp has been delayed over the past six months as the ODMs encountered multiple scaling issues of the rack-scale Blackwell deployments due to the complex system integration and high-power density format. For context, the NVL72 form factor has power consumption of 120 kW per rack, far exceeding typical data center rack power (12 kW–20 kW) and even the high-density H100/H200 racks. Issues were also encountered surrounding the ramping of the liquid cooling systems and the backplane connectors.

For context around the complexity of the compute architecture, within a GB200 NVL72, there are 108 chips (72 Blackwell GPUs, 36 Grace CPUs), 18 NVSwitch5 ASICs, 72 InfiniBand NICs, 18 BlueField-3 DPUs, 36 ConnectX-8 SuperNICs, 162 OSFP cages, 36 PSUs, 5 BBUs, 300 supercapacitors and ~5,000 copper cables, along with liquid cooling plates and connectors. The GB300 systems will have even more components and a larger memory attach footprint.

As a result, rack yields from December 2024 to May 2025 were sub-50%, resulting in smaller upward revenue revisions at NVIDIA. As we look forward, we expect bigger revenue revisions as supply ramps up. Our conversations with ODMs and suppliers suggest there have been rapid yield improvements in recent months, with rack-level yields likely to reach 80% in Q3. More recent channel checks from the sell side have suggested the ODM ecosystem has recently reached production rates close to 1,000 racks per week. This is a huge improvement from the 200–300 racks per month in February and March of this year.
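Because the two production rates above are quoted in different units (racks per week versus racks per month), a quick conversion helps size the improvement. The sketch below assumes an average of 52/12 weeks per month; the function name is hypothetical, and the inputs are simply the figures cited in the paragraph.

```python
WEEKS_PER_MONTH = 52 / 12  # average number of weeks in a calendar month


def racks_per_month(racks_per_week: float) -> float:
    """Convert a weekly production rate to an approximate monthly rate."""
    return racks_per_week * WEEKS_PER_MONTH


recent_monthly = racks_per_month(1_000)
print(f"recent rate: ~{recent_monthly:,.0f} racks per month")

# Compare against the earlier range of 200 to 300 racks per month.
for earlier_monthly in (200, 300):
    multiple = recent_monthly / earlier_monthly
    print(f"versus {earlier_monthly} per month: ~{multiple:.0f}x")
```

On this basis, the recent run rate is on the order of 14 to 22 times the February and March levels.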

As rack yields improve, we expect the next shortage in the supply chain to move to component supply, where we would expect to further rework and redefine our forecasting methodology to better align with the supply chain bottlenecks.

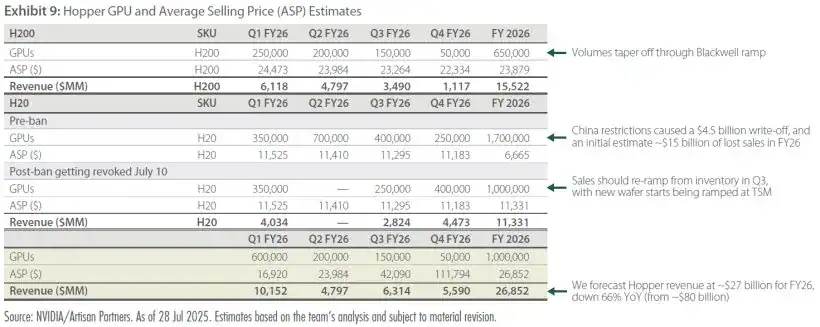

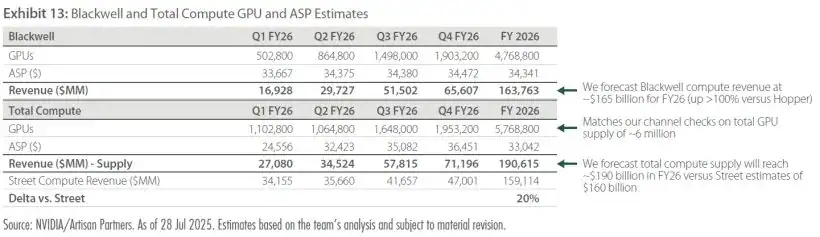

Exhibits 9–13 illustrate a breakdown of our compute model build for NVIDIA for 2025, which highlights the complexity and scale of the business.

Hopper revenues have started to decline from ~$80 billion in 2024 to ~$27 billion in 2025 as the Blackwell systems ramp up.

“When Blackwell starts shipping in volume, you couldn't give Hoppers away,” NVIDIA CEO Jensen Huang stated at GTC 2025.

NVIDIA has also had to cease supply of its H20 chip, which was optimized to comply with prior export restrictions. This cost the company ~$15 billion of lost sales for the year and the opportunity to compete in the ~$50 billion China accelerated compute total addressable market (TAM).

B200 chips have been shipping since late in Q4 FY25 for NVIDIA, with $30 billion of revenues recognized in the first two quarters. As ODMs experienced yield issues with the rack-scale GB200 format, hyperscalers were aggressively ordering discrete chips and the prior generation HGX form factor.

We see a material ramp in rack-scale deployments in 2H of the year as rack yields improve. The GB200 is expected to be the biggest driver of revenue growth at NVIDIA this year.

We expect GB300 to ramp up in 2H as well, with rack-scale production ramping up in late Q4 2025. This system has been optimized for inferencing, with more memory attach driving a 50% improvement in inferencing efficiency. We expect the GB300 to drive revenue growth through 1H of calendar year 2026, when Vera-Rubin arrives.

In aggregate, we see scope for material positive revenue revisions as supply improves through the rest of the year, and we would expect fewer yield issues with the GB300 form factor, given the experience gained from the supply chain over the past 12 months.

Broadcom

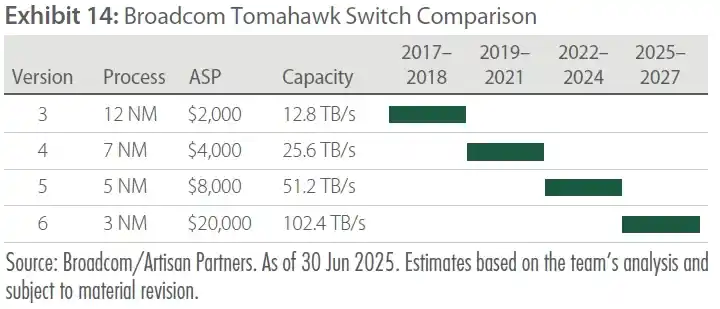

One of the things that we believe is underappreciated about both NVIDIA and Broadcom is their reliability of execution. Since its initial Tesla data center chip, NVIDIA has innovated consistently on a two-year cadence, which has accelerated recently. Broadcom, too, has consistently introduced upgraded versions of its high-performance Ethernet family of switches, labeled Tomahawk. These chips are essential to driving down compute costs, as they deliver higher bandwidth and lower latency for efficient networking in scaled environments.

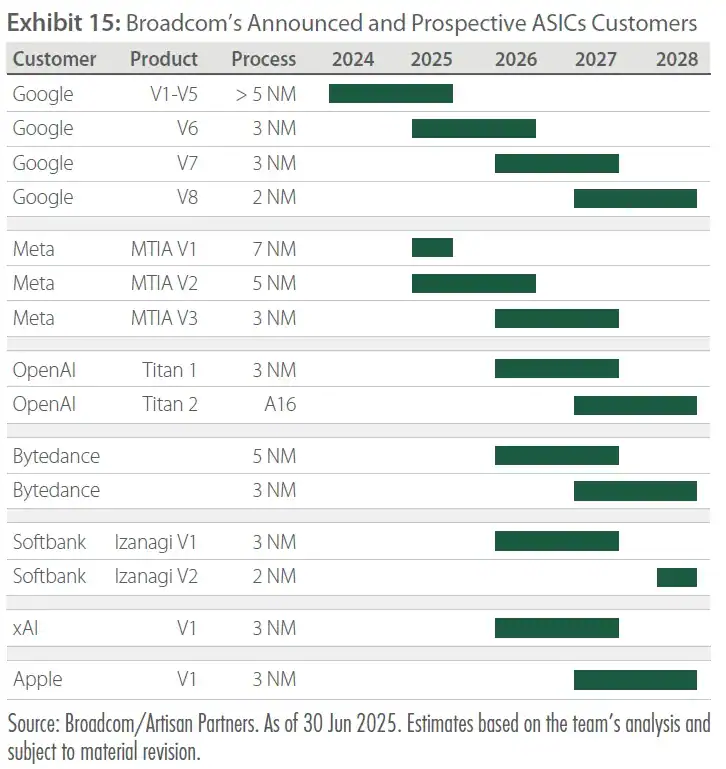

Broadcom has two major catalysts for its AI business over the coming years: 1) the ramp of its high-performance Tomahawk 6 Ethernet switch; and 2) the ramp of multiple new ASIC customers, to augment its scaled out TPU footprint with Google.

On the switch side, the new Tomahawk 6 chip can now support both scale-up and scale-out environments, which will be essential for some of the large-scale training clusters coming online over the next few years. Hock Tan, Broadcom CEO, has noted there are multiple 1 million+ XPU clusters in development. Due to the inherent cost savings the chip will provide, Broadcom has been able to charge more than its typical 2X ASP uplift per generation with the Tomahawk 6 family.

On the ASIC side, demand for ASICs is increasing, as hyperscalers want to lower the cost of ownership versus NVIDIA’s high-margin GPUs. ASICs typically solve for lower cost in internal-facing workloads that have consistent features. Broadcom has the broadest portfolio of networking IP and can be fastest to market at scale for its customer base. We think this experience and consistency of manufacturing at scale positions Broadcom to win multiple new customers over the coming years.

All of the large hyperscalers currently have ASICs in production at varying levels of success (Amazon and Microsoft utilize Marvell, Alchip and GUC). Broadcom specifically has announced three hyperscale customers for its custom ASICs (Google, Meta and ByteDance), and the company has four additional prospects in advanced development of their own next-generation AI accelerators, though these are not yet classified as customers. Broadcom highlights a serviceable addressable market (SAM) for its three announced customers alone in the range of $60 billion to $90 billion by 2027. We think the other customers include OpenAI, Apple, xAI and SoftBank, which we expect to ramp from 2026–2028 and support a 40% AI revenue CAGR through 2029.
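For a sense of what a 40% CAGR implies, the compounding arithmetic can be sketched as below. The base year is an assumption made purely for illustration, since the text does not specify one, and the function name is a hypothetical helper.

```python
# Compounding sketch for a constant annual growth rate.
# BASE_YEAR is an assumption; the text does not state the starting year.

def growth_multiple(cagr, years):
    """Cumulative growth multiple after compounding `cagr` for `years` years."""
    return (1 + cagr) ** years

CAGR = 0.40        # from the text
BASE_YEAR = 2025   # assumed for illustration
END_YEAR = 2029    # from the text

multiple = growth_multiple(CAGR, END_YEAR - BASE_YEAR)
print(f"Implied revenue multiple, {BASE_YEAR} to {END_YEAR}: {multiple:.2f}x")
```

With four years of compounding, a 40% CAGR implies revenue of roughly 3.8x the base-year level. A different base year changes the multiple accordingly.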
